Introduction to the concept of attention in AI

Artificial intelligence has undergone a transformative journey, evolving from simple algorithms to complex models that can understand and generate human-like text. At the heart of this evolution lies a concept that has redefined how machines interpret data: attention. Imagine being able to focus on specific parts of information while processing it, much like humans do when reading or listening. This idea paved the way for one of the most influential papers in AI history, “Attention Is All You Need.” Published by researchers at Google in 2017, this groundbreaking work introduced a new architecture known as the Transformer. It reshaped how neural networks are designed for language and set new standards for performance in natural language processing and beyond. Let’s delve into how this innovation transformed the AI landscape and explore its implications for the future!

The limitations of traditional neural networks

Traditional neural networks have transformed the landscape of artificial intelligence. However, they come with notable limitations that hinder their effectiveness.

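One major issue is that standard feedforward networks expect inputs of a fixed size. This makes them a poor fit for sequences of varying length, such as the sentences handled in natural language processing. Recurrent neural networks (RNNs) relax this constraint, but only by reading a sequence one element at a time.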

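Another challenge lies in handling long-range dependencies. In a recurrent network, information from early in a sequence must be carried forward through every intermediate step, so vital context can fade before it is needed, a problem closely tied to vanishing gradients during training. As a result, important relationships between distant parts of the data are often lost.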

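Moreover, training these networks requires significant computational resources and time. Because each step of a recurrent network depends on the output of the previous step, the computation cannot be parallelized across positions in a sequence, so training slows down as sequences grow longer.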

Finally, traditional models lack interpretability. How they arrive at their decisions remains largely opaque, which presents challenges in applications requiring transparency and trustworthiness.

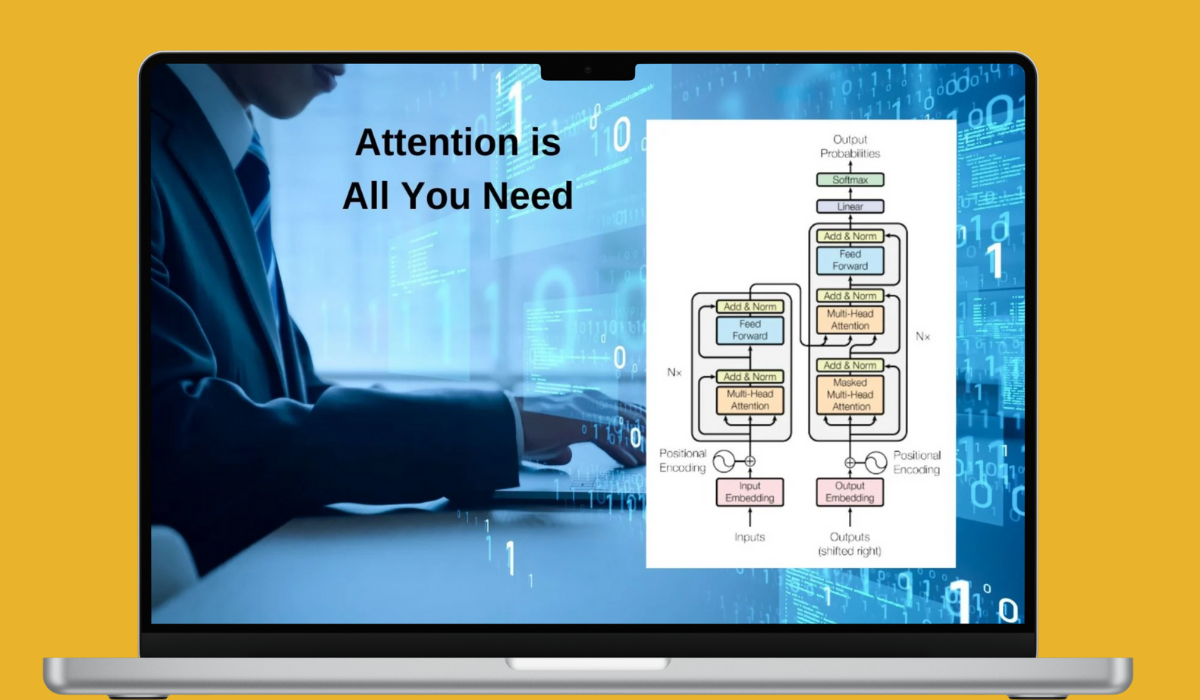

The groundbreaking paper by Google researchers

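In 2017, a team of Google researchers published a paper that reshaped the landscape of artificial intelligence. Titled “Attention Is All You Need,” it introduced an innovative model called the Transformer. The architecture dispensed with recurrence and convolutions entirely and relied on attention alone. That was a sharp break from the recurrent and convolutional models that dominated NLP at the time.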

The central idea was to build the model around self-attention. This mechanism lets a model weigh the significance of different words relative to one another within a sentence, so context can be captured more effectively than with traditional methods.

Traditional neural networks struggled with long-range dependencies and sequential processing. The Transformer’s ability to process data in parallel revolutionized this approach, making training faster and more efficient.
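The contrast can be made concrete with a minimal sketch that uses toy scalar inputs in place of real word vectors. `rnn_states` is a hypothetical recurrence with made-up weights, and it must compute each hidden state from the previous one. `attention_output` is also hypothetical, and it computes each position's output from the inputs alone. Because of that, the attention positions can be evaluated in any order, or in parallel on hardware that supports it.

```python
import math

def rnn_states(xs, w_h=0.5, w_x=1.0):
    """Toy scalar RNN: h_t = tanh(w_h * h_{t-1} + w_x * x_t).

    Each hidden state needs the previous one, so this loop is inherently
    sequential: step t cannot start until step t-1 has finished.
    """
    h = 0.0
    states = []
    for x in xs:
        h = math.tanh(w_h * h + w_x * x)
        states.append(h)
    return states

def attention_output(xs, i):
    """Toy scalar self-attention output for position i.

    The result depends only on the inputs, never on other outputs, so every
    position can be computed independently of the others.
    """
    scores = [xs[i] * xj for xj in xs]           # similarity of position i to every position
    top = max(scores)                            # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return sum(e / total * xj for e, xj in zip(exps, xs))  # weighted average of the inputs

xs = [0.2, -1.0, 0.7, 1.5]  # hypothetical toy inputs
print(rnn_states(xs))

# Computing the attention outputs front-to-back or back-to-front gives the same result.
forward = [attention_output(xs, i) for i in range(len(xs))]
backward = [attention_output(xs, i) for i in reversed(range(len(xs)))][::-1]
print(forward == backward)
```

Real Transformers carry out the attention step as large matrix multiplications. That is what lets GPUs process all positions of a sequence at once.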

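This groundbreaking work didn’t just enhance performance. The authors also noted that self-attention could yield more interpretable models, because the attention weights reveal which words the model relates to one another. This gives researchers a partial view of how a model arrives at its conclusions.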

How the paper introduced the Transformer model and self-attention mechanism

The paper “Attention Is All You Need” unveiled the Transformer model, a significant shift in how AI processes data. Unlike traditional sequential models, Transformers leverage parallel processing.

At its core is the self-attention mechanism. This allows the model to weigh input tokens differently based on their relevance. Imagine reading a sentence and focusing more on certain words for better understanding—self-attention does just that for machines.
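The paper defines this mechanism as scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. In self-attention, the queries Q, keys K, and values V are all projections of the same input. The minimal sketch below implements the formula in plain Python lists rather than a tensor library. The toy embeddings and the identity projection matrices are hypothetical. A real model learns its projections during training and runs several attention heads in parallel.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q, k, v):
    """Compute softmax(Q K^T / sqrt(d_k)) V and return the output and the weights."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

def self_attention(x, w_q, w_k, w_v):
    """Self-attention: queries, keys and values are all projections of the same input x."""
    return scaled_dot_product_attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))

# Hypothetical 2-dimensional embeddings for three tokens, and identity projections
# standing in for the learned matrices W_Q, W_K, W_V.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])  # each row sums to 1
```

Each row of `weights` shows how strongly one token attends to every token in the sentence, including itself. Each output row is the matching weighted average of the value vectors.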

This innovation lets every token attend directly to every other token. As a result, the model can capture relationships between words regardless of how far apart they are in a sequence. The result? Greater context awareness without passing information step by step through previous states.
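One consequence is that self-attention on its own ignores word order: shuffling the input tokens simply shuffles the outputs. The paper addresses this by adding positional encodings to the token embeddings. These are sine and cosine functions of different frequencies: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). Below is a minimal sketch of that formula. `positional_encoding` and `add_positions` are hypothetical helper names.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encodings following the formula in the paper.

    Even dimensions use sine and odd dimensions use cosine; each sine/cosine
    pair shares a wavelength that grows geometrically with the pair index.
    """
    pe = []
    for pos in range(seq_len):
        row = []
        for dim in range(d_model):
            i = dim // 2  # index of the sine/cosine pair this dimension belongs to
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def add_positions(embeddings):
    """Add the positional encoding for each position to that token's embedding."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, enc)] for emb, enc in zip(embeddings, pe)]

# The same hypothetical embedding placed at positions 0 and 1.
same_word = [[0.5, 0.5, 0.5, 0.5]] * 2
print(add_positions(same_word))
```

After the encodings are added, the same word embedding at two positions becomes two distinct vectors. This is how the model can tell “dog bites man” from “man bites dog.”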

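Because it no longer processes tokens one at a time, this approach makes training substantially faster and more efficient. In the paper, the Transformer set new state-of-the-art results on the WMT 2014 English-to-German and English-to-French translation benchmarks. It went on to spark an evolution across tasks from translation to text generation, setting new benchmarks for performance in natural language processing.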

Benefits of using attention in AI, such as improved performance and interpretability

Attention gives AI models a significant edge. With self-attention, a model can weight each part of the input according to its relevance to the output it is producing, instead of squeezing an entire sequence into a single fixed-size summary. This leads to better performance across a wide range of tasks.

Interpretability is another key advantage. Attention weights show which parts of the input a model focused on when producing each output. Visualizing them gives researchers and developers insights that were previously hard to obtain. These weights are not a complete explanation of a model’s reasoning, but they are a useful starting point.
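Below is a minimal sketch of this kind of inspection. The scores are made up for illustration and do not come from a trained model. `strongest_links` and `text_heatmap` are hypothetical helpers. With a real model, you would extract the weights for each layer and head from your framework and could plot them with any heatmap tool.

```python
import math

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def strongest_links(tokens, weights):
    """For each token, return (token, most-attended token, attention weight)."""
    links = []
    for tok, row in zip(tokens, weights):
        j = max(range(len(row)), key=row.__getitem__)
        links.append((tok, tokens[j], row[j]))
    return links

def text_heatmap(tokens, weights, shades=" .:*#"):
    """Render an attention matrix as characters; denser characters mean higher weight."""
    width = max(len(t) for t in tokens)
    lines = []
    for tok, row in zip(tokens, weights):
        cells = "".join(shades[min(int(w * len(shades)), len(shades) - 1)] for w in row)
        lines.append(f"{tok:>{width}} |{cells}|")
    return "\n".join(lines)

# Hypothetical raw attention scores for a 4-token sentence (not from a trained model).
tokens = ["the", "cat", "sat", "down"]
scores = [
    [2.0, 1.0, 0.1, 0.1],
    [0.5, 2.5, 1.0, 0.2],
    [0.1, 2.0, 1.0, 1.5],
    [0.1, 0.3, 2.2, 1.0],
]
weights = [softmax(r) for r in scores]
for src, dst, w in strongest_links(tokens, weights):
    print(f"{src!r} attends most to {dst!r} ({w:.2f})")
print(text_heatmap(tokens, weights))
```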

This transparency nurtures trust among users and fosters collaboration between AI systems and human experts. Improved accuracy contributes to better outcomes in areas like language translation, where nuances matter greatly.

As more industries adopt this technology, the benefits become increasingly evident. Companies leverage attention-driven models for everything from sentiment analysis to content generation, reshaping how we interact with machines daily.

Real-world applications of the Transformer model

The Transformer model has made significant waves across various industries. One of its most prominent applications is in natural language processing (NLP). From chatbots to translation services, the ability to understand context and generate human-like responses has transformed user experiences.

In healthcare, Transformers are being used for analyzing medical texts and improving clinical decision-making. These models can sift through vast amounts of data quickly, identifying patterns that might elude human experts.

E-commerce platforms have also benefited immensely. By enhancing recommendation systems with attention mechanisms, these businesses can tailor product suggestions based on individual customer preferences more effectively.

Moreover, creative fields like music composition and art generation are tapping into this technology. The ability to learn styles and structures enables artists to explore new dimensions in their work while collaborating with AI as a creative partner.

Advancements and developments since the publication of the paper

Since the landmark paper “Attention Is All You Need,” the landscape of AI has transformed dramatically. The Transformer sparked new work in numerous research areas and led to influential architectures such as BERT, which builds on the Transformer’s encoder, and GPT, which builds on its decoder.

These advancements have pushed boundaries in natural language processing. Models are now capable of generating coherent and contextually relevant text with remarkable accuracy, enhancing user interactions across platforms.
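Text generation in GPT-style models depends on a causal mask, which the original Transformer also used in its decoder. Under this mask, each position may attend only to itself and earlier positions, so the model cannot peek at the words it is supposed to predict. BERT-style encoders omit this mask and attend in both directions. Below is a minimal sketch of the masking step. `causal_softmax` is a hypothetical helper that operates on raw attention scores.

```python
import math

def causal_softmax(scores):
    """Apply a causal mask to a square score matrix, then softmax each row.

    Row i may attend only to columns 0..i. Later columns are set to -inf before
    the softmax, so they receive exactly zero weight.
    """
    weights = []
    for i, row in enumerate(scores):
        masked = [s if j <= i else -math.inf for j, s in enumerate(row)]
        top = max(masked)  # finite, because position i itself is never masked
        exps = [math.exp(s - top) for s in masked]  # math.exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# Hypothetical raw scores for a 3-token sequence (identical rows for clarity).
for row in causal_softmax([[1.0, 2.0, 3.0]] * 3):
    print([round(w, 3) for w in row])
# The first row is [1.0, 0.0, 0.0]: token 0 can only see itself.
```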

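Moreover, fine-tuning has emerged as a critical technique for making these models more effective. A model is first pretrained on large amounts of general text and then adapted to a specific task using a much smaller labeled dataset. Researchers continue to explore methods that improve efficiency without sacrificing performance.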

The impact isn’t limited to language tasks. Vision Transformers (ViT) apply attention to images by splitting each image into fixed-size patches and treating every patch as a token. This approach is redefining image recognition and opening doors to new applications in computer vision.
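The patching step can be sketched in a few lines. `image_to_patches` is a simplified, hypothetical helper that assumes a single-channel (grayscale) image stored as a list of rows. A real Vision Transformer works on color images and commonly splits a 224x224 image into 16x16 patches, which gives 196 tokens. It then maps each flattened patch to an embedding with a learned linear projection, which is not shown here.

```python
def image_to_patches(image, patch_size):
    """Split a grayscale image (list of rows) into flattened, non-overlapping patches.

    Patches are returned in row-major order, so each one can serve as a token.
    Both image dimensions must be divisible by patch_size.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# A toy 4x4 "image" whose pixel values are just their positions 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, 2)
print(len(patches))  # 4
print(patches[0])    # [0, 1, 4, 5]
```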

As researchers dive deeper into this field, hybrid models combining attention with other techniques promise even greater breakthroughs on the horizon.

Future possibilities and potential impact on fields beyond AI

The implications of the Transformer model stretch well beyond the field in which it was born. As researchers and developers continue to explore its capabilities, we’re starting to see exciting opportunities arise not only in natural language processing and computer vision but also in domains such as healthcare.

For instance, the self-attention mechanism allows models to better understand context within data. This can be transformative for applications like medical diagnosis where understanding subtle nuances is crucial. Similarly, in finance, attention-based systems could analyze vast amounts of market data more effectively than ever before.

Moreover, the adaptability of Transformers paves the way for their integration into emerging technologies such as augmented reality and virtual assistants. Imagine a virtual assistant that understands you not just through keywords but by grasping your intent and emotional tone—this could become a reality thanks to advancements stemming from “Attention Is All You Need.”

As we venture deeper into this uncharted territory, it’s clear that the ripple effects of this groundbreaking paper will shape industries well beyond AI itself. The future holds promise for innovation driven by mechanisms designed with attention at their core. This shift may fundamentally redefine how we interact with technology.